Cutting-Edge Technologies for Practical Solutions

AI • NLP • ML • SES

Our technology integrates state-of-the-art methods, and our expertise has proven successful across a wide range of problems and tasks. Reveal applies these technologies in new ways to overcome the limitations of conventional lexical approaches. Our research focuses on producing the practical, robust solutions that organizations need.

Neural Networks for Complex Predictions

Inductive models such as Neural Networks, with their ability to deal with heterogeneous, noisy, multimodal and unstructured evidence, are a crucial technology for modern business models.

The complexity of modern business management is largely determined by the large volumes of data that managers must analyze before making decisions. Deep learning typically refers to a set of neural approaches that infer complex hierarchical models to capture highly non-linear relationships in low-level input data and induce high-level concept recognition rules. These approaches enable the analysis of very large and complex data, such as images, videos, text or other unstructured information. They have become very popular in many AI research areas, from Computer Vision to Natural Language Processing (NLP) and Planning, because of the excellent performance they achieve over very simple input representations, e.g. raw image pixels.

The Reveal technology, based on neural paradigms, has been successfully applied to several heterogeneous predictive tasks, ranging from the automatic analysis of structured data obtained from sensors in water treatment plants, to the detection of emotions and sentiments in social network messages, to the automatic induction of Business Process Models from documents in the banking domain. In all these use cases, neural paradigms enable effective integration of structured and unstructured data from both endogenous and exogenous sources.

Explainable Neural Networks for document-driven decision making

The aim is to enable the user or developer of a neural solution to look inside the “black box” and understand why a specific inference is provided.

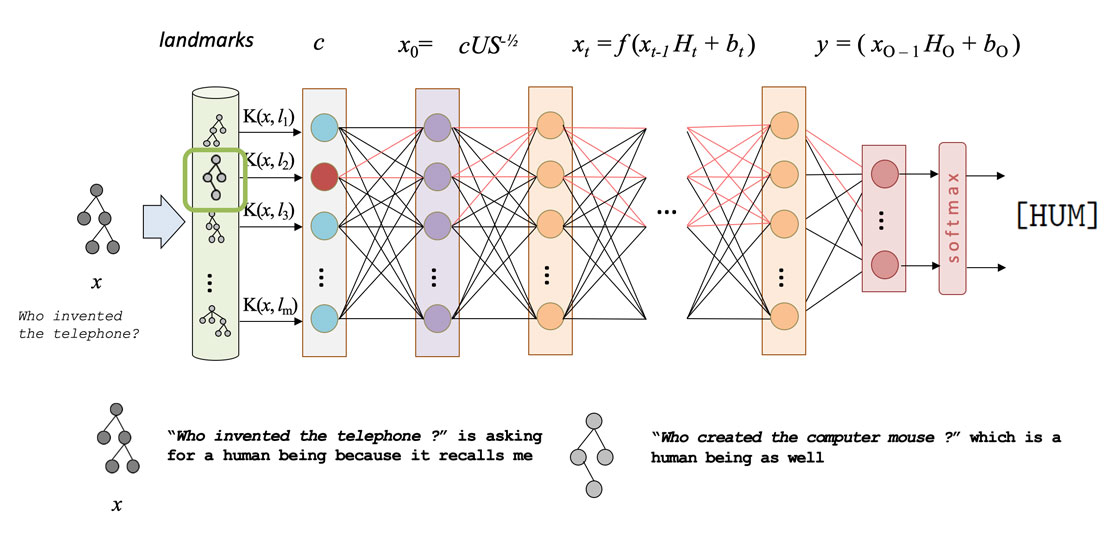

The figure shows an example based on a Question Classifier modeled as a multilayer perceptron (MLP): after the MLP decision (i.e., the assignment of the input question to a class), our technology supports auditing requests such as the question “Why did you select this category?”. The answer is generated from realistic examples, selected from the training material, that explain by analogy the reasons behind the decision. As a result, the machine is able to answer: “‘Who invented the telephone?’ is asking for a human being because it reminds me of ‘Who created the computer mouse?’, which refers to a human being as well.”

Paper Available at: https://www.aclweb.org/anthology/D19-1415
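The explanation-by-analogy idea described above can be illustrated with a minimal sketch. It is not the method of the cited paper: a plain bag-of-words cosine similarity stands in for whatever notion of relevance the actual network uses, and the training set, the labels and the `explain` function are hypothetical names introduced only for this illustration.

```python
from collections import Counter
from math import sqrt

# Hypothetical toy training material: (question, label) pairs.
TRAINING = [
    ("Who created the computer mouse ?", "HUMAN"),
    ("Which scientist discovered penicillin ?", "HUMAN"),
    ("Where is the Eiffel Tower ?", "LOCATION"),
    ("When did the Roman Empire fall ?", "DATE"),
]


def bow(text):
    """Lower-cased bag-of-words vector of a whitespace-tokenized text."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def explain(question, predicted_label, training=TRAINING):
    """Justify a decision by citing the most similar training example
    that carries the same label as the prediction (assumed stand-in)."""
    candidates = [q for q, label in training if label == predicted_label]
    if not candidates:
        return None
    query = bow(question)
    best = max(candidates, key=lambda q: cosine(query, bow(q)))
    return (f'"{question}" is assigned to {predicted_label} because it '
            f'resembles "{best}", which is also labeled {predicted_label}.')


print(explain("Who invented the telephone ?", "HUMAN"))
```

Restricting the candidates to training examples that share the predicted label keeps the cited analogy consistent with the decision being audited.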

Ethical Machine Learning

This approach would allow organizations to rely on machines whose ethics is guaranteed by design.

A deep learning machine is generally trained to minimize the probability of making incorrect decisions on new examples. This strongly depends on the quality of the dataset adopted for training, which may be affected by bias (an unrepresentative sample of the entire population) or by other intrinsic limitations (unethical decisions recorded by an organization that are not suitable for generalization). What about an AI-driven Digital Lending machine (AI DL) and the ethical implications of its individual decisions? The risk is that lending is unevenly distributed across the population of requesters because some subgroups are scarcely represented in the training data; such risks should be minimized in the future behavior of our AI DL.

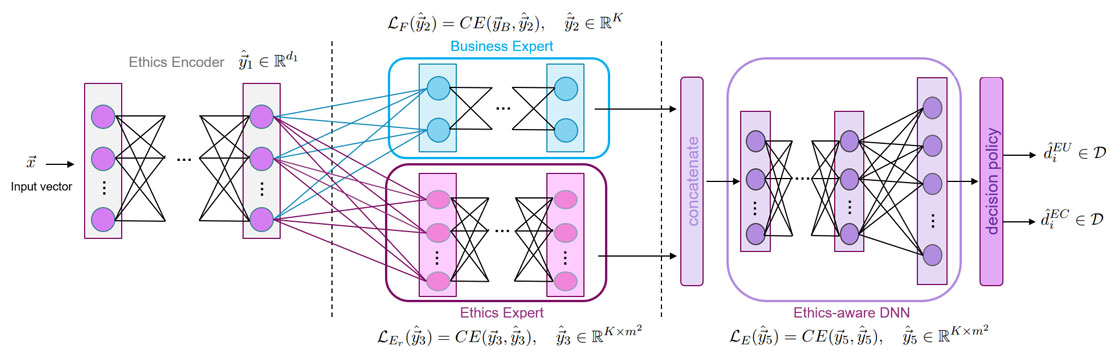

As a result, the business decisions are mediated by an ethics-aware network.

Our aim is to define training processes that comply not only with the constraints of the input dataset but also with a set of ethical principles defined a priori. Such an approach would allow organizations to rely on machines whose ethics is guaranteed by design and resilient to possibly biased training sets. Details are in (Rossini et al., 2020, Actionable ethics through neural learning).

In the following picture, a standard architecture that optimizes its behavior from the “Business perspective” (the Business Expert) is extended to operate over data augmented with ethical constraints (by the Ethics encoder). At the same time, an additional network capitalizes on these ethical principles (the Ethical Expert). Their decisions are then mediated by an ethics-aware network that optimizes the business objectives while satisfying the ethical principles.

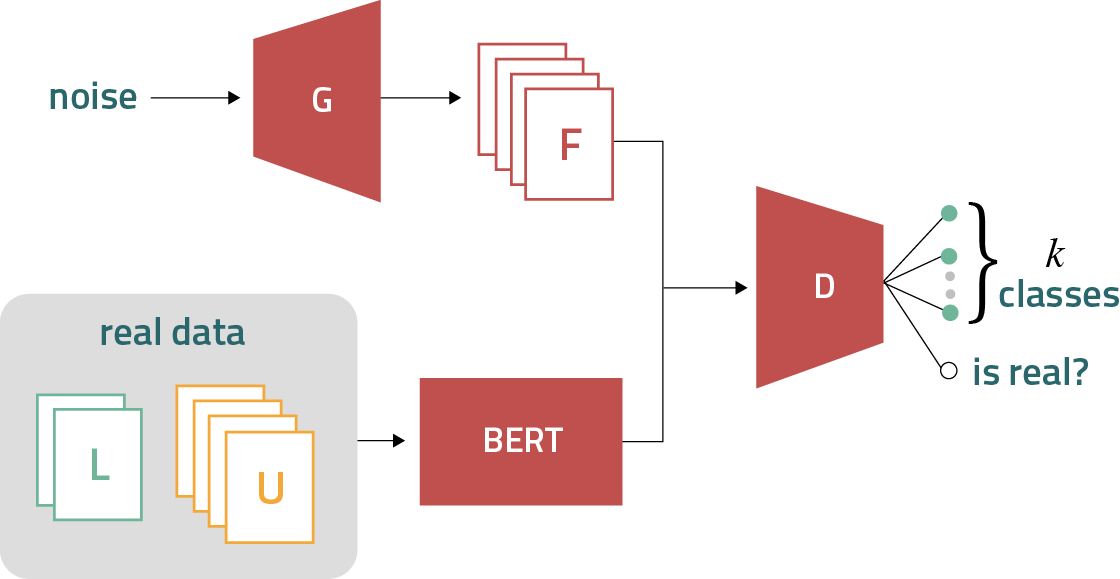
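One simple way to picture an objective that serves business and ethical goals at the same time is a weighted sum of two terms. The sketch below is only an assumption made for illustration, not the architecture in the picture: the business term is a binary cross-entropy on lending decisions, the ethical term is a hypothetical penalty on the gap in mean approval score between subgroups, and `ethics_weight` is an invented parameter.

```python
from math import log

EPS = 1e-12  # avoids log(0)


def business_loss(probs, labels):
    """Mean binary cross-entropy between approval probabilities and gold decisions."""
    total = sum(y * log(p + EPS) + (1 - y) * log(1 - p + EPS)
                for p, y in zip(probs, labels))
    return -total / len(labels)


def parity_gap(probs, groups):
    """Difference between the highest and lowest mean approval score across
    subgroups (an assumed, illustrative ethical constraint)."""
    by_group = {}
    for p, g in zip(probs, groups):
        by_group.setdefault(g, []).append(p)
    means = [sum(v) / len(v) for v in by_group.values()]
    return max(means) - min(means)


def ethics_aware_loss(probs, labels, groups, ethics_weight=1.0):
    """Business objective plus a weighted penalty for uneven treatment of subgroups."""
    return business_loss(probs, labels) + ethics_weight * parity_gap(probs, groups)


# Toy batch: the model approves subgroup "a" far more readily than subgroup "b".
probs = [0.9, 0.9, 0.1, 0.1]
labels = [1, 1, 0, 0]
groups = ["a", "a", "b", "b"]
print(business_loss(probs, labels), parity_gap(probs, groups))
print(ethics_aware_loss(probs, labels, groups, ethics_weight=0.5))
```

In this toy batch the business loss alone is low, since the predictions match the recorded decisions, yet the penalty term exposes the uneven treatment of the two subgroups that a biased dataset would otherwise teach the model to reproduce.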

Semi-supervised learning in Transformer-Based Architectures

While deep methods obtain state-of-the-art results in many tasks, they require very large labeled training datasets to yield robust solutions. Can an organization with large-scale collections of unlabeled data afford to develop models able to deal with hundreds of thousands of unseen examples? Reveal technology relies on research at the University of Rome Tor Vergata that extends state-of-the-art technologies (such as BERT) with Generative Adversarial training methods. This allows us to significantly reduce training costs, capitalize on the unlabeled data already available to an organization, and shorten the time required to deploy effective models.
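To give an intuition of how unlabeled data can contribute to training in an adversarial setting, the sketch below computes a discriminator loss in the general style of semi-supervised GANs. It is an assumption made for illustration and not necessarily the exact formulation used by Reveal: the classifier outputs probabilities over the k real classes plus one final "fake" class, and the function and argument names are hypothetical.

```python
from math import log

EPS = 1e-12  # avoids log(0)


def discriminator_loss(labeled, real, generated):
    """Semi-supervised GAN-style discriminator loss (illustrative sketch).

    Every probability vector covers k real classes plus a final 'fake' class.
    labeled:   list of (probs, gold_class_index) for annotated examples
    real:      list of probs for real examples, annotated or not
    generated: list of probs for examples produced by the generator
    """
    # Supervised term: probability of the gold class, given the example is real.
    supervised = -sum(log(p[y] / (1.0 - p[-1] + EPS) + EPS)
                      for p, y in labeled) / max(len(labeled), 1)
    # Unsupervised terms: real examples should not look fake, generated ones should.
    real_term = -sum(log(1.0 - p[-1] + EPS) for p in real) / max(len(real), 1)
    fake_term = -sum(log(p[-1] + EPS) for p in generated) / max(len(generated), 1)
    return supervised + real_term + fake_term


# Toy comparison with k = 2 real classes plus the 'fake' class.
good = discriminator_loss(
    labeled=[([0.90, 0.05, 0.05], 0)],
    real=[[0.50, 0.45, 0.05]],
    generated=[[0.05, 0.05, 0.90]],
)
bad = discriminator_loss(
    labeled=[([0.10, 0.40, 0.50], 0)],
    real=[[0.10, 0.10, 0.80]],
    generated=[[0.50, 0.40, 0.10]],
)
print(good, bad)
```

Only the supervised term needs annotations; the other two terms can be computed on any real or generated example, which is why a large unlabeled collection remains useful when labels are scarce.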

Integrated language and visual inference

The adopted neural technology processes an input image and generates its description one word at a time.

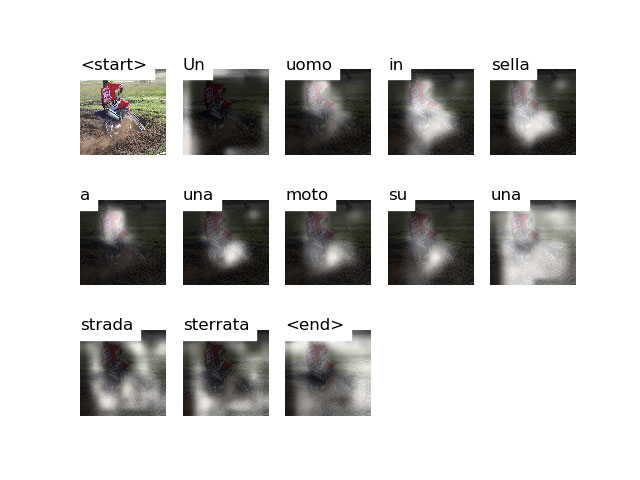

How can we retrieve images or videos using natural language? How can we convey the information represented in a picture? At Reveal, we extract and combine the multimodal information depicted in multimedia items using complex neural architectures, e.g. to automatically derive the description of an image or video in Italian.

Neural networks such as Convolutional, Recurrent and Transformer-based NNs are involved.

The adopted neural technology processes an input image such as the one shown here and generates its description one word at a time, in this case “Un uomo in sella ad una moto su una strada sterrata” (“A man riding a motorcycle on a dirt road”). It is worth noting how the network focuses on specific areas of the input image while generating each token (the white areas placed over the thumbnails of the input).

Details about the technology can be found in: Scaiella et al., “Large scale datasets for image and video captioning in Italian”, IJCoL 2020.
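The word-by-word generation with attention described above can be sketched as a greedy decoding loop. This is a minimal illustration under stated assumptions: `toy_step` is a hypothetical stand-in for a trained captioning network, it simply replays the example caption and attends to one image region per step, and the `step_fn` interface is invented for this sketch.

```python
from math import exp

END = "<eos>"  # hypothetical end-of-caption marker


def softmax(scores):
    """Turn raw region scores into attention weights that sum to 1."""
    m = max(scores)
    exps = [exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_caption(step_fn, regions, max_len=20):
    """Generate a caption one token at a time, recording where the model attends.

    step_fn(regions, prefix) must return (token_scores, region_scores):
    a dict of candidate next tokens and one raw score per image region.
    """
    caption, attention_trace = [], []
    for _ in range(max_len):
        token_scores, region_scores = step_fn(regions, caption)
        token = max(token_scores, key=token_scores.get)  # greedy choice
        if token == END:
            break
        caption.append(token)
        attention_trace.append(softmax(region_scores))
    return caption, attention_trace


# Toy stand-in for a trained network (assumption, for illustration only).
TARGET = "Un uomo in sella ad una moto su una strada sterrata".split()


def toy_step(regions, prefix):
    nxt = TARGET[len(prefix)] if len(prefix) < len(TARGET) else END
    focus = len(prefix) % len(regions)
    region_scores = [1.0 if i == focus else 0.0 for i in range(len(regions))]
    return {nxt: 1.0, END: 0.5}, region_scores


caption, trace = greedy_caption(toy_step, regions=[None] * 4)
print(" ".join(caption))
print([round(w, 2) for w in trace[0]])
```

Each entry of `attention_trace` plays the role of the highlighted areas in the thumbnails: one weight per image region for each generated token.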